cleanlab

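Open-source tool that automatically detects issues in machine learning datasets and improves them

cleanlab is an open-source, data-centric AI toolkit that automatically detects problems such as label errors, outliers, and duplicates in machine learning datasets. It works with image, text, tabular, and other kinds of data, and supports any machine learning model. Beyond finding data issues, cleanlab can also train more robust models and evaluate data quality. Built on solid theoretical foundations, cleanlab is efficient and easy to use, making it a practical tool for improving data quality and model performance.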

cleanlab helps you clean data and labels by automatically detecting issues in an ML dataset. To facilitate machine learning with messy, real-world data, this data-centric AI package uses your existing models to estimate dataset problems that can be fixed to train even better models.
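To make "uses your existing models" concrete, here is a minimal sketch of one simple label-quality score: the self-confidence, i.e. the model's predicted probability for the label each example was given. cleanlab offers this as one of several scoring methods (`cleanlab.rank.get_label_quality_scores` with `method="self_confidence"`); the sketch below is not that implementation, plain lists stand in for NumPy arrays, and the helper names are hypothetical.

```python
from typing import List


def self_confidence_scores(labels: List[int], pred_probs: List[List[float]]) -> List[float]:
    """Score each example by the model's predicted probability of its given label.

    Hypothetical helper. pred_probs should ideally be out-of-sample
    (e.g. from cross-validation). A low score means the model doubts the label.
    """
    if len(labels) != len(pred_probs):
        raise ValueError("labels and pred_probs must have the same length")
    return [probs[label] for label, probs in zip(labels, pred_probs)]


def rank_by_quality(scores: List[float]) -> List[int]:
    """Return example indices ordered from most to least suspicious."""
    return sorted(range(len(scores)), key=lambda i: scores[i])


# Toy data: 4 examples, 2 classes (0 = cat, 1 = dog).
labels = [0, 1, 1, 0]
pred_probs = [
    [0.9, 0.1],    # labeled cat, model agrees
    [0.2, 0.8],    # labeled dog, model agrees
    [0.95, 0.05],  # labeled dog, model strongly thinks cat: likely label error
    [0.6, 0.4],    # labeled cat, model mildly agrees
]
scores = self_confidence_scores(labels, pred_probs)
print(scores)                   # [0.9, 0.8, 0.05, 0.6]
print(rank_by_quality(scores))  # [2, 3, 1, 0]
```

Reviewing examples in this order puts the likeliest label errors first.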

<p align="center"> <img src="https://raw.githubusercontent.com/cleanlab/assets/master/cleanlab/datalab_issues.png" width=74% height=74%> </p> <p align="center"> Examples of various issues in a Cat/Dog dataset <b>automatically detected</b> by cleanlab via this code: </p>

```python
import cleanlab

# `dataset`, `feature_embeddings`, and `pred_probs` are placeholders for your own data.
lab = cleanlab.Datalab(data=dataset, label_name="column_name_for_labels")
# Fit any ML model, get its feature_embeddings & pred_probs for your data
lab.find_issues(features=feature_embeddings, pred_probs=pred_probs)
lab.report()
```
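Datalab relies on the `features` argument for issue types such as outliers and near duplicates. As a rough sketch of the underlying intuition (assumption: Euclidean distance on embeddings; Datalab's actual scoring and thresholds differ), an example far from its k nearest neighbors looks atypical, and nearly identical embeddings are candidate near duplicates. The helper names, `k`, and the threshold are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]


def knn_outlier_scores(features: List[Vector], k: int = 2) -> List[float]:
    """Average Euclidean distance from each point to its k nearest neighbors.

    Larger values mean the example looks more atypical (a potential outlier).
    """
    n = len(features)
    if not 0 < k < n:
        raise ValueError("k must be between 1 and len(features) - 1")
    scores = []
    for i in range(n):
        dists = sorted(math.dist(features[i], features[j]) for j in range(n) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


def near_duplicate_pairs(features: List[Vector], threshold: float) -> List[Tuple[int, int]]:
    """Return index pairs whose embeddings are closer than `threshold`."""
    pairs = []
    for i in range(len(features)):
        for j in range(i + 1, len(features)):
            if math.dist(features[i], features[j]) < threshold:
                pairs.append((i, j))
    return pairs


# Toy 2-D embeddings: a tight cluster, one exact duplicate, and one far-away point.
features = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.0, 0.1], [5.0, 5.0]]
scores = knn_outlier_scores(features, k=2)
print(max(range(len(scores)), key=scores.__getitem__))  # 4
print(near_duplicate_pairs(features, threshold=1e-6))    # [(2, 3)]
```

The brute-force pairwise loop is O(n²) and only meant for illustration; real workloads need an approximate nearest-neighbor index.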

- Use cleanlab to automatically check any image, text, audio, or tabular dataset.

- Use cleanlab to automatically: detect data issues (outliers, duplicates, label errors, etc.), train robust models, infer consensus labels and annotator quality for multi-annotator data, and suggest which data to (re)label next (active learning).
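For multi-annotator data, cleanlab's CROWDLAB method combines annotator labels with a classifier's predictions. The sketch below shows only the much simpler majority-vote baseline such methods improve upon: a consensus label per example plus each annotator's agreement rate with it. The data layout (a dict of annotator to label per example, `None` when not annotated), the smallest-label tie-break, and the function names are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List, Optional

# Each row holds one example's labels keyed by annotator; None = not annotated.
Annotations = List[Dict[str, Optional[int]]]


def majority_vote(annotations: Annotations) -> List[int]:
    """Consensus label per example by majority vote (ties -> smallest label)."""
    consensus = []
    for row in annotations:
        votes = Counter(label for label in row.values() if label is not None)
        if not votes:
            raise ValueError("every example needs at least one annotation")
        top = max(votes.values())
        consensus.append(min(label for label, count in votes.items() if count == top))
    return consensus


def annotator_agreement(annotations: Annotations, consensus: List[int]) -> Dict[str, float]:
    """Fraction of each annotator's labels that match the consensus label."""
    matches: Counter = Counter()
    totals: Counter = Counter()
    for row, agreed in zip(annotations, consensus):
        for annotator, label in row.items():
            if label is None:
                continue
            totals[annotator] += 1
            matches[annotator] += int(label == agreed)
    return {a: matches[a] / totals[a] for a in totals}


annotations = [
    {"alice": 0, "bob": 0, "carol": 1},
    {"alice": 1, "bob": 1, "carol": 1},
    {"alice": 0, "bob": None, "carol": 1},
    {"alice": 1, "bob": 1, "carol": 0},
]
consensus = majority_vote(annotations)
print(consensus)                                   # [0, 1, 0, 1]
print(annotator_agreement(annotations, consensus))  # {'alice': 1.0, 'bob': 1.0, 'carol': 0.25}
```

Majority vote ignores how reliable each annotator is and breaks ties arbitrarily, which is exactly where model-assisted approaches help.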

Try easy mode with Cleanlab Studio

While this open-source package finds data issues, its utility depends on your having a good existing ML model and an interface to efficiently fix these issues in your dataset. Cleanlab Studio provides all these pieces: it is a data curation platform to find and fix problems in any image, text, or tabular dataset. Cleanlab Studio automatically runs optimized algorithms from this package on top of AutoML and foundation models fit to your data, and presents detected issues (plus AI-suggested fixes) in an intelligent data correction interface.

Try it for free! Adopting Cleanlab Studio enables users of this package to:

- Work 100x faster (1 min to analyze your raw data with zero code or ML work; optionally use Python API)

- Produce better-quality data (10x more types of issues automatically detected and corrected via built-in AI)

- Accomplish more (auto-label data, deploy ML instantly, audit LLM inputs/outputs, moderate content, ...)

- Monitor incoming data and detect issues in real-time (integrate your data pipeline on an Enterprise plan)

Run cleanlab open-source

This cleanlab package runs on Python 3.8+ and supports Linux, macOS, and Windows.

- Get started here! Install via `pip` or `conda`.

- Developers who install the bleeding-edge version from source should refer to this master branch documentation.

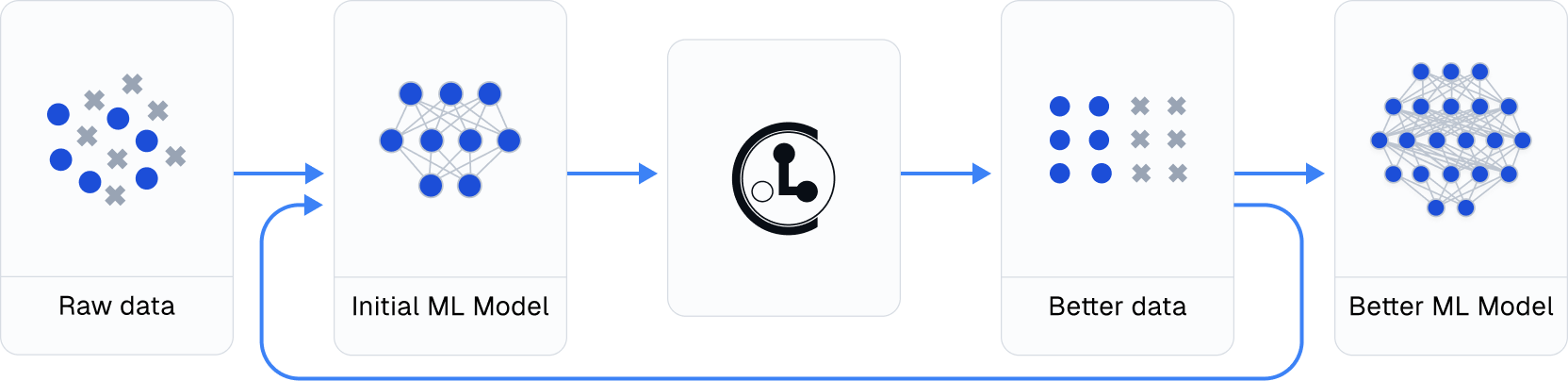

Practicing data-centric AI can look like this:

1. Train an initial ML model on the original dataset.

2. Use this model to diagnose data issues (via cleanlab methods) and improve the dataset.

3. Train the same model on the improved dataset.

4. Try various modeling techniques to further improve performance.

Most folks jump from Step 1 → 4, but you may achieve big gains without any change to your modeling code by using cleanlab! Continuously boost performance by iterating Steps 2 → 4 (and try to evaluate with cleaned data).
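Steps 1 to 3 can be sketched end to end with a toy model. The example assumes a hypothetical 1-D nearest-centroid classifier (any model that outputs class probabilities would do), obtains out-of-sample `pred_probs` via K-fold cross-validation, drops examples whose given label receives low probability, and retrains on the rest. The 0.5 threshold and all helper names are illustrative assumptions, not cleanlab defaults.

```python
import math
from typing import List


def fit_centroids(xs: List[float], ys: List[int], num_classes: int) -> List[float]:
    """'Train' a toy model: the mean feature value of each class.

    Assumes every class appears in xs at least once.
    """
    centroids = []
    for c in range(num_classes):
        members = [x for x, y in zip(xs, ys) if y == c]
        centroids.append(sum(members) / len(members))
    return centroids


def predict_proba(centroids: List[float], x: float) -> List[float]:
    """Softmax over negative distances to each class centroid."""
    logits = [-abs(x - c) for c in centroids]
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def out_of_sample_pred_probs(
    xs: List[float], ys: List[int], num_classes: int, k: int = 3
) -> List[List[float]]:
    """K-fold cross-validation: each example is scored by a model that never saw it."""
    pred_probs: List[List[float]] = [[] for _ in xs]
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        centroids = fit_centroids([xs[i] for i in train], [ys[i] for i in train], num_classes)
        for i in range(fold, len(xs), k):
            pred_probs[i] = predict_proba(centroids, xs[i])
    return pred_probs


def find_suspect_labels(
    ys: List[int], pred_probs: List[List[float]], threshold: float = 0.5
) -> List[int]:
    """Indices whose given label receives out-of-sample probability below threshold."""
    return [i for i, (y, p) in enumerate(zip(ys, pred_probs)) if p[y] < threshold]


# Step 1: original dataset; example 2 (x=0.5) is mislabeled as class 1.
xs = [0.0, 1.0, 0.5, 2.0, 10.0, 9.0, 11.0, 10.5, 1.5]
ys = [0, 0, 1, 0, 1, 1, 1, 1, 0]
print(fit_centroids(xs, ys, 2))  # [1.125, 8.2]

# Step 2: diagnose issues from out-of-sample predictions and drop them.
suspects = find_suspect_labels(ys, out_of_sample_pred_probs(xs, ys, 2))
print(suspects)  # [2]
keep = [i for i in range(len(xs)) if i not in suspects]

# Step 3: train the same model on the improved dataset.
print(fit_centroids([xs[i] for i in keep], [ys[i] for i in keep], 2))  # [1.125, 10.125]
```

Only the data changed between Steps 1 and 3; the modeling code is identical, and the class-1 centroid moves away from the mislabeled point.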

Use cleanlab with any model and in most ML tasks

All features of cleanlab work with any dataset and any model. Yes, any model: PyTorch, TensorFlow, Keras, JAX, HuggingFace, OpenAI, XGBoost, scikit-learn, etc.
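One reason this works is that many cleanlab methods consume only model outputs, such as the `pred_probs` matrix (one row of predicted class probabilities per example) used in the Datalab example above. Many frameworks emit raw logits instead; a minimal sketch, assuming your model's logits have been converted to plain Python lists, turns them into `pred_probs` with a numerically stable softmax. The helper name is hypothetical.

```python
import math
from typing import List


def logits_to_pred_probs(logits: List[List[float]]) -> List[List[float]]:
    """Row-wise softmax: turn per-class scores from any model into probabilities.

    Subtracting each row's max before exponentiating avoids overflow
    without changing the result.
    """
    pred_probs = []
    for row in logits:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        pred_probs.append([e / total for e in exps])
    return pred_probs


# e.g. logits from a PyTorch, TensorFlow, or JAX model, converted to lists
logits = [[2.0, 0.0, -1.0], [1000.0, 1000.0, 1000.0]]
probs = logits_to_pred_probs(logits)
print([round(p, 3) for p in probs[0]])                # [0.844, 0.114, 0.042]
print(all(abs(p - 1 / 3) < 1e-12 for p in probs[1]))  # True (no overflow)
```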

cleanlab is useful across a wide variety of machine learning tasks. Tasks for which this data-centric AI package offers dedicated functionality include:

- Binary and multi-class classification

- Multi-label classification (e.g. image/document tagging)

- Token classification (e.g. entity recognition in text)

- Regression (predicting a numerical column in a dataset)

- Image segmentation (images with per-pixel annotations)

- Object detection (images with bounding box annotations)

- Classification with data labeled by multiple annotators

- Active learning with multiple annotators (suggest which data to label or re-label to improve the model most)

- Outlier detection (identify atypical data that appears out of distribution)
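For regression, one intuitive signal is how far a given target value sits from a model's out-of-sample prediction. The sketch below scores each example by its absolute residual, scaled by the median absolute residual and mapped into (0, 1] so that lower means more suspicious. This scoring rule is an assumption chosen for illustration, not cleanlab's regression algorithm (which the cited paper "Detecting Errors in Numerical Data via any Regression Model" describes); the function name is hypothetical.

```python
import math
import statistics
from typing import List


def residual_quality_scores(targets: List[float], predictions: List[float]) -> List[float]:
    """Map each |target - prediction| to a score in (0, 1]; lower = more suspicious."""
    residuals = [abs(t - p) for t, p in zip(targets, predictions)]
    # Fall back to a scale of 1.0 if most predictions are exact (median residual 0).
    scale = statistics.median(residuals) or 1.0
    return [math.exp(-r / scale) for r in residuals]


targets = [3.0, 5.1, 6.9, 9.2, 40.0]      # last value looks like a data-entry error
predictions = [3.1, 5.0, 7.0, 9.0, 11.0]  # out-of-sample predictions from any regressor
scores = residual_quality_scores(targets, predictions)
print(min(range(len(scores)), key=scores.__getitem__))  # 4
```

Scaling by the median keeps one huge error from inflating the scale, so the typical residuals still score near the middle of the range.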

For other ML tasks, cleanlab can still help you improve your dataset if appropriately applied. See our Example Notebooks and Blog.

So fresh, so cleanlab

Beyond automatically catching all sorts of issues lurking in your data, this data-centric AI package helps you deal with noisy labels and train more robust ML models. Here's an example:

```python
from cleanlab.classification import CleanLearning
from cleanlab.dataset import health_summary
from sklearn.linear_model import LogisticRegression  # stand-in for your favorite classifier

# `data`, `labels`, and `test_data` are placeholders for your own dataset.
# cleanlab works with **any classifier** exposing a scikit-learn-style fit/predict/predict_proba API.
# Yup, you can use PyTorch/TensorFlow/OpenAI/XGBoost/etc. (wrapped to be scikit-learn compatible if needed).
cl = CleanLearning(LogisticRegression())

# cleanlab finds data and label issues in **any dataset**... in ONE line of code!
label_issues = cl.find_label_issues(data, labels)

# cleanlab trains a robust version of your model that works more reliably with noisy data.
cl.fit(data, labels)

# cleanlab estimates the predictions you would have gotten if you had trained with *no* label issues.
cl.predict(test_data)

# A universal data-centric AI tool, cleanlab quantifies class-level issues and overall data quality, for any dataset.
health_summary(labels, confident_joint=cl.confident_joint)
```
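`health_summary` reports class-level label issues and an overall quality score. As a rough sketch of how such numbers can be read off an estimated joint distribution of given and true labels (rows = given label, columns = true label, entries summing to 1), here is a simplified version. The function name and the definitions used (per-class noise rate = off-diagonal mass of a row divided by that row's mass; overall health = 1 minus total off-diagonal mass) are assumptions and may not match cleanlab's output exactly.

```python
from typing import Dict, List


def summarize_label_health(joint: List[List[float]], class_names: List[str]) -> Dict[str, float]:
    """Simplified health summary from a K x K joint of (given label, true label).

    joint[i][j] ~ fraction of ALL examples with given label i and true label j.
    """
    total = sum(sum(row) for row in joint)
    if abs(total - 1.0) > 1e-6:
        raise ValueError("joint entries should sum to 1")
    summary = {}
    for i, name in enumerate(class_names):
        row_mass = sum(joint[i])
        off_diagonal = row_mass - joint[i][i]
        summary[f"noise_rate[{name}]"] = off_diagonal / row_mass if row_mass else 0.0
    mislabeled_mass = total - sum(joint[k][k] for k in range(len(joint)))
    summary["overall_label_health"] = 1.0 - mislabeled_mass
    return summary


joint = [
    [0.45, 0.05],  # labeled "cat": 5% of all data is labeled cat but is actually dog
    [0.02, 0.48],  # labeled "dog": 2% of all data is labeled dog but is actually cat
]
for key, value in summarize_label_health(joint, ["cat", "dog"]).items():
    print(key, round(value, 3))
# noise_rate[cat] 0.1
# noise_rate[dog] 0.04
# overall_label_health 0.93
```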

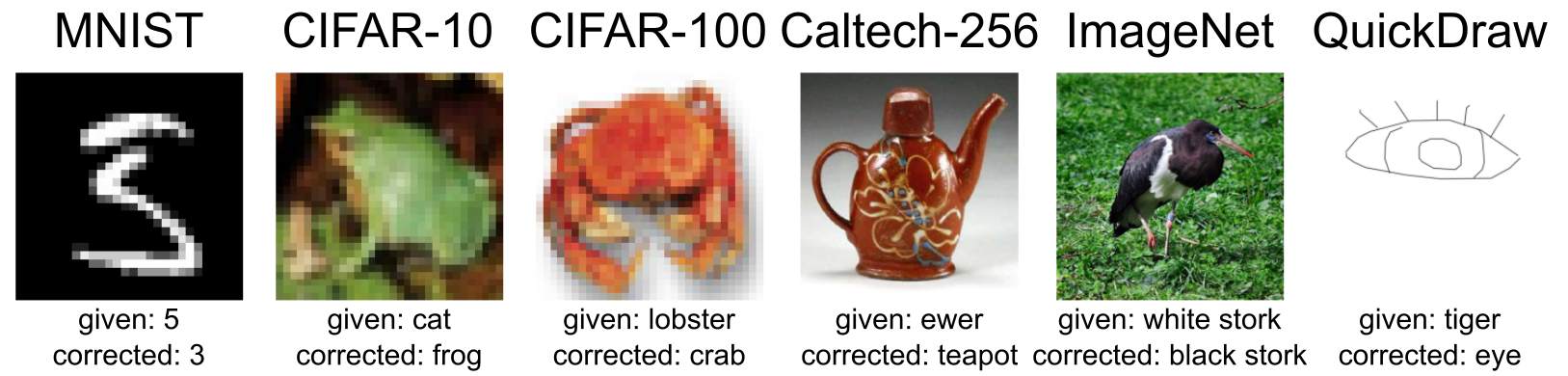

cleanlab cleans your data's labels via state-of-the-art confident learning algorithms, published in this paper and blog. See some of the datasets cleaned with cleanlab at labelerrors.com.
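The central object in confident learning is the confident joint. A minimal sketch following the paper's definition: the per-class threshold t_j is the average predicted probability of class j over examples labeled j; an example labeled i is counted in column j when its probability for j is at least t_j (taking the most probable such j if several pass), and examples below every threshold are not counted. Off-diagonal entries are candidate label issues, which corresponds to cleanlab's `filter_by="confident_learning"` option. This omits the calibration and pruning steps cleanlab performs (its own version is `cleanlab.count.compute_confident_joint`); the helper names here are hypothetical.

```python
from typing import List, Tuple


def per_class_thresholds(labels: List[int], pred_probs: List[List[float]], num_classes: int) -> List[float]:
    """t_j = mean predicted probability of class j among examples labeled j."""
    thresholds = []
    for j in range(num_classes):
        probs_j = [p[j] for y, p in zip(labels, pred_probs) if y == j]
        thresholds.append(sum(probs_j) / len(probs_j))
    return thresholds


def confident_joint(
    labels: List[int], pred_probs: List[List[float]], num_classes: int
) -> Tuple[List[List[int]], List[int]]:
    """Count C[i][j]: examples with given label i that confidently belong to class j.

    Also returns the indices of off-diagonal examples (candidate label issues).
    """
    t = per_class_thresholds(labels, pred_probs, num_classes)
    C = [[0] * num_classes for _ in range(num_classes)]
    issues = []
    for idx, (i, p) in enumerate(zip(labels, pred_probs)):
        confident = [j for j in range(num_classes) if p[j] >= t[j]]
        if not confident:
            continue  # not confidently in any class: left out of the count
        j = max(confident, key=lambda c: p[c])
        C[i][j] += 1
        if j != i:
            issues.append(idx)
    return C, issues


labels = [0, 0, 0, 1, 1, 1]
pred_probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.1, 0.9], [0.3, 0.7], [0.6, 0.4]]
C, issues = confident_joint(labels, pred_probs, 2)
print(C)       # [[2, 1], [0, 2]]
print(issues)  # [2]
```

Per-class thresholds make the count robust to classes the model is systematically over- or under-confident about, which a single global cutoff would not be.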

cleanlab is:

- backed by theory -- with provable guarantees of exact label noise estimation, even with imperfect models.

- fast -- code is parallelized and scalable.

- easy to use -- one line of code to find mislabeled data, bad annotators, outliers, or train noise-robust models.

- general -- works with any dataset (text, image, tabular, audio, ...) + any model (PyTorch, OpenAI, XGBoost, ...)

Citation and related publications

cleanlab is based on peer-reviewed research. Here are relevant papers to cite if you use this package:

<details><summary><a href="https://arxiv.org/abs/1911.00068">Confident Learning (JAIR '21)</a> (<b>click to show bibtex</b>) </summary>

@article{northcutt2021confidentlearning,
  title={Confident Learning: Estimating Uncertainty in Dataset Labels},
  author={Curtis G. Northcutt and Lu Jiang and Isaac L. Chuang},
  journal={Journal of Artificial Intelligence Research (JAIR)},
  volume={70},
  pages={1373--1411},
  year={2021}
}

@inproceedings{northcutt2017rankpruning,
  author={Northcutt, Curtis G. and Wu, Tailin and Chuang, Isaac L.},
  title={Learning with Confident Examples: Rank Pruning for Robust Classification with Noisy Labels},
  booktitle={Proceedings of the Thirty-Third Conference on Uncertainty in Artificial Intelligence},
  series={UAI'17},
  year={2017},
  location={Sydney, Australia},
  numpages={10},
  url={http://auai.org/uai2017/proceedings/papers/35.pdf},
  publisher={AUAI Press}
}

@inproceedings{kuan2022labelquality,
  title={Model-agnostic label quality scoring to detect real-world label errors},
  author={Kuan, Johnson and Mueller, Jonas},
  booktitle={ICML DataPerf Workshop},
  year={2022}
}

@inproceedings{kuan2022ood,
  title={Back to the Basics: Revisiting Out-of-Distribution Detection Baselines},
  author={Kuan, Johnson and Mueller, Jonas},
  booktitle={ICML Workshop on Principles of Distribution Shift},
  year={2022}
}

@inproceedings{wang2022tokenerrors,
  title={Detecting label errors in token classification data},
  author={Wang, Wei-Chen and Mueller, Jonas},
  booktitle={NeurIPS Workshop on Interactive Learning for Natural Language Processing (InterNLP)},
  year={2022}
}

@inproceedings{goh2022crowdlab,
  title={CROWDLAB: Supervised learning to infer consensus labels and quality scores for data with multiple annotators},
  author={Goh, Hui Wen and Tkachenko, Ulyana and Mueller, Jonas},
  booktitle={NeurIPS Human in the Loop Learning Workshop},
  year={2022}
}

@inproceedings{goh2023activelab,
  title={ActiveLab: Active Learning with Re-Labeling by Multiple Annotators},
  author={Goh, Hui Wen and Mueller, Jonas},
  booktitle={ICLR Workshop on Trustworthy ML},
  year={2023}
}

@inproceedings{thyagarajan2023multilabel,
  title={Identifying Incorrect Annotations in Multi-Label Classification Data},
  author={Thyagarajan, Aditya and Snorrason, Elías and Northcutt, Curtis and Mueller, Jonas},
  booktitle={ICLR Workshop on Trustworthy ML},
  year={2023}
}

@inproceedings{cummings2023drift,
  title={Detecting Dataset Drift and Non-IID Sampling via k-Nearest Neighbors},
  author={Cummings, Jesse and Snorrason, Elías and Mueller, Jonas},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}

@inproceedings{zhou2023errors,
  title={Detecting Errors in Numerical Data via any Regression Model},
  author={Zhou, Hang and Mueller, Jonas and Kumar, Mayank and Wang, Jane-Ling and Lei, Jing},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}

@inproceedings{tkachenko2023objectlab,
  title={ObjectLab: Automated Diagnosis of Mislabeled Images in Object Detection Data},
  author={Tkachenko, Ulyana and Thyagarajan, Aditya and Mueller, Jonas},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
